Apache Spark is an open-source distributed computing framework for processing large volumes of data. Capable of both batch and real-time processing, it provides high-level APIs in Java, Scala, Python, and R for writing driver programs. Spark also provides higher-level components: Spark SQL for structured data, Spark MLlib for machine learning, and GraphX for graph processing. For streaming, it has Spark Streaming, which processes data streams using DStreams (the old API), and Structured Streaming, which processes structured data streams using Datasets and DataFrames (a newer API than DStreams).

Prerequisites For Apache Spark

Apache Spark is developed using the Java and Scala languages. A compatible Java virtual machine (JVM) is sufficient to launch the Spark Standalone cluster and run Spark applications. To run applications written in Python or R, additional installations are required.
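Since a JVM is the one hard prerequisite, it can help to confirm that one is visible before going further. The following is a minimal sketch in Python, assuming the `java` executable is expected on your `PATH`; the helper names `find_java` and `java_version_banner` are made up for this illustration and are not part of Spark.

```python
import shutil
import subprocess


def find_java():
    """Return the path to the `java` executable on PATH, or None if absent."""
    return shutil.which("java")


def java_version_banner(java_path):
    """Return the text printed by `java -version` (normally written to stderr)."""
    result = subprocess.run(
        [java_path, "-version"], capture_output=True, text=True, check=False
    )
    return (result.stderr or result.stdout).strip()


if __name__ == "__main__":
    java = find_java()
    if java is None:
        print("No JVM found on PATH; install one before starting Spark.")
    else:
        print(java_version_banner(java))
```

Whether the reported version is compatible depends on the Spark release you install, so check it against that release's documentation.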


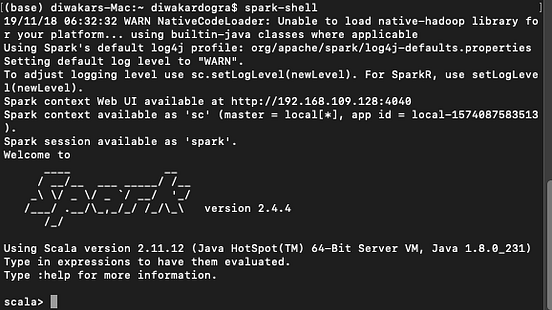

Apache Spark is one of the most popular Big Data frameworks for data processing. With its in-memory computation capability, it has become one of the fastest data processing engines. In this blog, I will take you through the process of setting up a local standalone Spark cluster. I'll also talk about how to start and stop the cluster. You will also find out how to submit a Spark application to this cluster and how to submit jobs interactively using the spark-shell feature.
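As a rough preview of those steps, here is a small Python sketch that only builds the command lines involved; it does not run anything. It assumes a Spark 3.1 or later layout (where the worker scripts are named `start-worker.sh` and `stop-worker.sh`), a hypothetical install directory `/opt/spark`, a hypothetical application file `my_app.py`, and a master reachable at `localhost` on the default port 7077. The function name `standalone_commands` is invented for this example.

```python
from pathlib import Path


def standalone_commands(spark_home, master_host="localhost", app="my_app.py"):
    """Build (but do not run) the commands for a local standalone cluster."""
    home = Path(spark_home)
    # 7077 is the default port of the standalone master; the host is an assumption.
    master_url = f"spark://{master_host}:7077"
    return {
        "start_master": [str(home / "sbin" / "start-master.sh")],
        "start_worker": [str(home / "sbin" / "start-worker.sh"), master_url],
        "submit": [str(home / "bin" / "spark-submit"), "--master", master_url, app],
        "shell": [str(home / "bin" / "spark-shell"), "--master", master_url],
        "stop_worker": [str(home / "sbin" / "stop-worker.sh")],
        "stop_master": [str(home / "sbin" / "stop-master.sh")],
    }


if __name__ == "__main__":
    # "/opt/spark" is a placeholder; use your own Spark directory.
    for name, cmd in standalone_commands("/opt/spark").items():
        print(f"{name}: {' '.join(cmd)}")
```

The master may advertise itself under the machine's hostname rather than `localhost`, so treat the URL here as a placeholder and use the one the master reports when it starts.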

Since its release, Apache Spark has met organizations' expectations for querying, data processing, and generating analytics reports quickly.
